Introduction to MLPerf Training

Generative AI has captured the public’s attention and imagination; its uses can be widespread and revolutionary. Large language models (LLMs) can perform language-related tasks and power the advanced conversational abilities of services such as ChatGPT, Gemini, Perplexity AI, and many more.

The MLPerf Training benchmark suite aims to provide a standard way to measure the performance of machine learning (ML) model training. Naturally, the MLPerf Training working group has been watching these developments closely and considering how to expand the suite to cover emerging training scenarios.

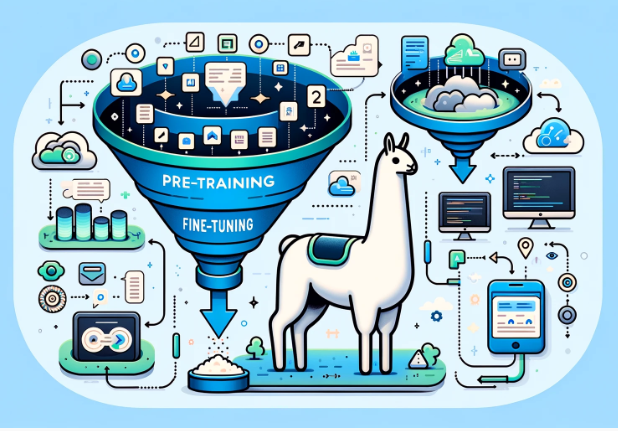

LLM training can be broadly divided into two phases: pre-training and fine-tuning.

- Pre-training is the crucial initial phase in which the neural network is trained on a large and diverse dataset to learn general language understanding: grammar, idioms, facts, and the subtleties of different contexts. The pre-training dataset typically consists of trillions of words from books, articles, websites, and social media. During this phase, the model learns to predict the next token from the preceding context, and in doing so develops a general understanding of language. The MLPerf GPT-3 benchmark captures the performance of the pre-training phase of the LLM development lifecycle.

- Fine-tuning adapts a pre-trained model to specific tasks or domains by further training it on a smaller, task-specific dataset. Because it starts from pre-trained weights rather than from scratch, fine-tuning needs far less computation than pre-training while still improving performance on the target task.

Fine-tuning has been widely adopted by the industry because of its cost-effectiveness and reduced infrastructure requirements, which make it highly accessible. With this in mind, the MLPerf Training working group formed a special task force in 2023 to explore and prioritize the inclusion of a fine-tuning benchmark in MLPerf Training.

Model selection

The task force evaluated numerous candidate models, ranging from smaller models like Llama 2 7B and Mistral 7B to mid-range models such as Falcon 40B, MPT 30B, and Llama 2 70B. After thorough evaluation and deliberation, the task force selected Llama 2 70B as the model most aligned with its objectives.

Several factors contributed to this decision:

- Model diversity: One of the goals of MLPerf Training is to curate a small set of models with diverse characteristics so that the overall suite represents the much larger universe of trained models. With the right mix of models, the benchmark results can be broadly useful. The existing MLPerf Training suite includes two language models: BERT (340 million parameters) and GPT-3 (175 billion parameters). Llama 2 70B (70 billion parameters) adds a distinct model size as well as some architectural diversity, thanks to features such as grouped-query attention (GQA), the SwiGLU activation function, and rotary positional embeddings.

- Community engagement: The task force considered Hugging Face contributions and leaderboards as solid indicators of community engagement and industry adoption. Llama 2 70B emerged as the frontrunner in community engagement at the time of benchmark development. Since then, Meta has released Llama 3 70B, which was trained on a larger dataset with an improved tokenizer. However, most of the network layers are common to the two models, so per-iteration performance on Llama 2 will likely track closely with performance on Llama 3.

- Licensing flexibility: For an open benchmark consortium, model licenses must be flexible enough to offer unlimited access to participating submitters at minimum and, ideally, to the press and public in general. This requirement typically reduces the candidate pool to models (and datasets) with permissive licenses such as Apache 2.0, MIT, or similar. Thanks to Meta’s support, MLCommons is making the Llama 2 family of models available to MLCommons members for the purpose of conducting benchmarking with the MLPerf benchmark suites.

- Efficient benchmarking integration: Llama 2 70B offers easy deployment, simplifying benchmarking and integration for the task force. Also, model scales are growing exponentially, so large language models may not fit on a single accelerator anymore. To train on multiple accelerators, AI practitioners will have to employ methods like model parallelism to split the model efficiently across devices. Choosing a moderate-sized model like Llama 2 70B creates opportunities for submitters to showcase such software innovations.
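Back-of-the-envelope arithmetic shows why a 70B-parameter model no longer fits on one accelerator. The sketch below counts only the weights, stored in 16-bit precision (2 bytes per parameter), and assumes a hypothetical accelerator with 80 GB of memory; gradients, optimizer state, and activations would add more on top. It then shows how evenly sharding the weights across devices, as tensor (model) parallelism does, shrinks the per-device footprint.

```python
import math

BYTES_PER_PARAM_16BIT = 2  # bf16/fp16 weights


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_16BIT) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_devices_for_weights(num_params: float, device_mem_gb: float) -> int:
    """Fewest devices whose combined memory can hold the weights,
    assuming an even split and nothing else stored on the devices."""
    return math.ceil(weight_memory_gb(num_params) / device_mem_gb)


def per_device_shard_gb(num_params: float, num_devices: int) -> float:
    """Per-device weight memory when the weights are sharded evenly."""
    return weight_memory_gb(num_params) / num_devices


if __name__ == "__main__":
    llama2_70b = 70e9      # nominal parameter count
    device_mem_gb = 80.0   # hypothetical accelerator memory (assumption)
    print(f"weights alone:        {weight_memory_gb(llama2_70b):.0f} GB")
    print(f"min devices (weights): {min_devices_for_weights(llama2_70b, device_mem_gb)}")
    print(f"per device, 8-way:    {per_device_shard_gb(llama2_70b, 8):.1f} GB")
```

Even before training state is counted, the weights alone exceed a single 80 GB device, so splitting the model across several accelerators is unavoidable.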

Selecting a fine-tuning technique

Next, the task force investigated three techniques for adapting large language models to downstream tasks: supervised fine-tuning (SFT), which trains the entire model on curated datasets; reinforcement learning from human feedback (RLHF), which uses human feedback to guide model training; and parameter-efficient fine-tuning (PEFT), which updates only a subset of the model parameters.

PEFT stood out among these three approaches as the best fit for the benchmark because of its computational efficiency and architectural simplicity. Low-rank adaptation (LoRA) is a PEFT technique that freezes the pre-trained model weights and injects trainable low-rank decomposition matrices. We chose LoRA as the fine-tuning technique for MLPerf Training because of its small number of trainable parameters and reduced memory footprint, efficient training process, lower barrier to entry, and popularity (likely driven by the preceding factors). LoRA fine-tuning also has different performance characteristics than the existing MLPerf benchmarks.
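A minimal, dependency-free sketch of the LoRA idea: the frozen weight matrix W is never updated, and a trainable low-rank update B·A, scaled by alpha/r, is added to the layer's output. Following the common LoRA initialization, A starts with small random values and B starts at zero, so the adapted layer initially reproduces the pre-trained layer exactly. The rank r = 16 and the square 8192 × 8192 projection (matching Llama 2 70B's hidden size) in the parameter-count example are illustrative assumptions, not the benchmark's configuration.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Linear layer y = W x + (alpha / r) * B (A x), with W frozen."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0):
        self.weight = weight  # pre-trained weight, d_out x d_in; never updated
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        # A (r x d_in) gets small random values; B (d_out x r) starts at zero,
        # so the low-rank update contributes nothing at initialization.
        self.A: Matrix = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B: Matrix = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        frozen_out = matvec(self.weight, x)
        low_rank_out = matvec(self.B, matvec(self.A, x))
        return [f + self.scale * u for f, u in zip(frozen_out, low_rank_out)]

    def trainable_parameters(self) -> int:
        # Only A and B would receive gradient updates during fine-tuning.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def lora_fraction(d_out: int, d_in: int, rank: int) -> float:
    """Trainable LoRA parameters as a fraction of the full matrix's parameters."""
    return rank * (d_in + d_out) / (d_in * d_out)


if __name__ == "__main__":
    identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    layer = LoRALinear(identity, rank=2, alpha=4.0)
    print(layer.forward([1.0, 2.0, 3.0, 4.0]))      # equals the frozen output at init
    print(layer.trainable_parameters())              # 2*4 + 4*2 = 16
    print(f"{lora_fraction(8192, 8192, 16):.4%}")   # share of one 8192 x 8192 matrix
```

For a d_out × d_in matrix, full fine-tuning trains d_out·d_in values while LoRA trains r·(d_in + d_out); with the assumed sizes above that is 1/256 of the matrix, which is where LoRA's memory and compute savings come from.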

Dataset selection

For this benchmark, the task force chose the government report (GovReport) dataset from SCROLLS (Standardized CompaRison Over Long Language Sequences). The dataset consists of report-summary pairs based on reports written by government research agencies, including the Congressional Research Service and the U.S. Government Accountability Office.

Context length is the maximum number of tokens the model can take as input at once; everything the model conditions on when predicting the next token must fit within it. Enabling LLMs to process longer contexts is crucial for capturing nuanced relationships and generating coherent, relevant text. The task force experimented with various context lengths and opted for the longest that fit within a single eight-accelerator system: 8192 tokens. That is roughly 6,144 words, significantly longer than the sequence lengths of the existing language models in MLPerf Training: 512 for BERT and 2048 for GPT-3.
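The word estimate above follows the common rule of thumb of about 0.75 English words per token (8192 × 0.75 = 6144). The sketch below makes that arithmetic explicit and shows one simple way to fit a tokenized example into a fixed 8192-token context: truncate anything longer, right-pad anything shorter, and keep an attention mask that marks real tokens. The 0.75 ratio, the padding ID of 0, and right-side truncation are illustrative assumptions; the benchmark's actual preprocessing may differ.

```python
from typing import List, Tuple

MAX_CONTEXT = 8192        # context length chosen for the benchmark
WORDS_PER_TOKEN = 0.75    # rough rule of thumb for English text (assumption)


def approx_words(num_tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Rough number of English words that fit in num_tokens tokens."""
    return round(num_tokens * words_per_token)


def fit_to_context(token_ids: List[int], max_len: int = MAX_CONTEXT,
                   pad_id: int = 0) -> Tuple[List[int], List[int]]:
    """Truncate or right-pad token_ids to exactly max_len tokens.

    Returns (ids, attention_mask): the mask is 1 for real tokens and 0 for
    padding. pad_id=0 is a hypothetical choice for this sketch.
    """
    ids = token_ids[:max_len]          # drop tokens beyond the context window
    mask = [1] * len(ids)
    padding = max_len - len(ids)
    return ids + [pad_id] * padding, mask + [0] * padding


if __name__ == "__main__":
    print(approx_words(MAX_CONTEXT))                 # 6144
    ids, mask = fit_to_context(list(range(1, 11)), max_len=8)
    print(ids, mask)                                 # truncated to 8 tokens, no padding
```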

Benchmark technical details

The Llama 2 70B LoRA benchmark is based on the Hugging Face ecosystem and tools in order to represent a common developer experience. Hugging Face is one of the most widely used environments for fine-tuning due to the extensive and easy-to-use Transformers library, which provides researchers and developers with pre-trained models. Developers can adapt these models with user-friendly and well-documented APIs, enabling efficient and effective fine-tuning.

To keep the benchmark representative, the task force followed the most common convention among Hugging Face users for fine-tuning LLMs: loading the model through AutoModelForCausalLM. AutoModelForCausalLM is a Hugging Face auto class that instantiates the appropriate model architecture for causal language modeling, where the goal is to predict the next token in a sequence given the preceding tokens. Such models are primarily used for generating text.
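Causal language modeling trains each position to predict the token that follows it, so the targets are simply the input sequence shifted left by one. The plain-Python sketch below computes the resulting next-token cross-entropy from per-position logits. It illustrates the objective only; it is not Hugging Face's implementation, and `loss_mask` is a hypothetical parameter of this sketch (not a Transformers argument) that restricts the average to selected target tokens.

```python
import math
from typing import List, Optional


def log_softmax(logits: List[float]) -> List[float]:
    """Numerically stable log-softmax over a vocabulary of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def next_token_cross_entropy(logits: List[List[float]], token_ids: List[int],
                             loss_mask: Optional[List[int]] = None) -> float:
    """Mean cross-entropy of predicting token_ids[t + 1] from position t.

    logits[t] holds the model's scores over the vocabulary at position t.
    loss_mask (hypothetical, for this sketch only): if given, the target at
    t + 1 counts only when loss_mask[t + 1] is truthy; otherwise every
    next-token prediction is averaged.
    """
    total, count = 0.0, 0
    for t in range(len(token_ids) - 1):
        target = token_ids[t + 1]  # shift by one: position t predicts token t + 1
        if loss_mask is not None and not loss_mask[t + 1]:
            continue
        total -= log_softmax(logits[t])[target]
        count += 1
    if count == 0:
        raise ValueError("no target tokens selected")
    return total / count


if __name__ == "__main__":
    vocab_size = 4
    tokens = [2, 0, 3, 1]
    uniform = [[0.0] * vocab_size for _ in tokens]
    # A model that spreads probability evenly scores ln(4), about 1.386.
    print(next_token_cross_entropy(uniform, tokens))
```

Averaging over every next-token prediction matches a training loss over the full sequence, while passing a mask that selects only the target (summary) tokens gives a loss computed over the target tokens alone.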

The MLPerf Training v4.0 benchmark also specifies:

- Loss metric: The benchmark uses the cross-entropy of next-token prediction as the loss function (much like MLPerf GPT-3).

- Target accuracy metric: The standard quality metric for text summarization is the ROUGE score. Because computing ROUGE scores is computationally intensive and time-consuming, the typical approach is to monitor the evaluation loss until it converges and only then compute ROUGE scores. Our testing showed a strong correlation (R = 0.9) between evaluation loss and ROUGE scores, which supports using evaluation loss as a proxy for summarization quality. Note that, unlike the training loss, the evaluation loss is calculated only over the target tokens.

- Selected region of convergence: To ensure fairness, each submitter is required to run the benchmark 10 times. Results are then scored using Olympic averaging, which discards the fastest and slowest runs and averages the remaining eight.
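Olympic averaging can be sketched in a few lines: sort the run times, drop the single fastest and single slowest, and take the arithmetic mean of what remains. The ten times in the usage example are made-up placeholders for illustration, not real submission results.

```python
from typing import Sequence


def olympic_average(times: Sequence[float]) -> float:
    """Mean of the run times after dropping one fastest and one slowest run."""
    if len(times) < 3:
        raise ValueError("need at least 3 runs to drop the extremes")
    ordered = sorted(times)
    kept = ordered[1:-1]  # discard the single minimum and maximum
    return sum(kept) / len(kept)


if __name__ == "__main__":
    # Ten hypothetical time-to-train results in minutes (placeholder values).
    runs = [30.0, 31.0, 29.5, 30.5, 45.0, 30.0, 29.0, 30.5, 31.0, 30.0]
    # The 45-minute outlier and the fastest run are both excluded from the mean.
    print(olympic_average(runs))
```

Dropping the extremes makes the score less sensitive to a single unusually slow or unusually fast run, which matters because time to convergence varies from run to run.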

Conclusion

In this blog post, we shared the insights, motivation, and process behind the creation of the new MLPerf Llama 2 70B LoRA fine-tuning benchmark. This benchmark is less computationally intensive than GPT-3, so it should serve as an excellent starting point for working with language models and lower the barrier to entry for MLPerf participants. We are thrilled by the community’s interest in the benchmark, as evidenced by 30 submissions in MLPerf Training v4.0 for this benchmark, and we hope to see this enthusiasm continue to grow.