Infra

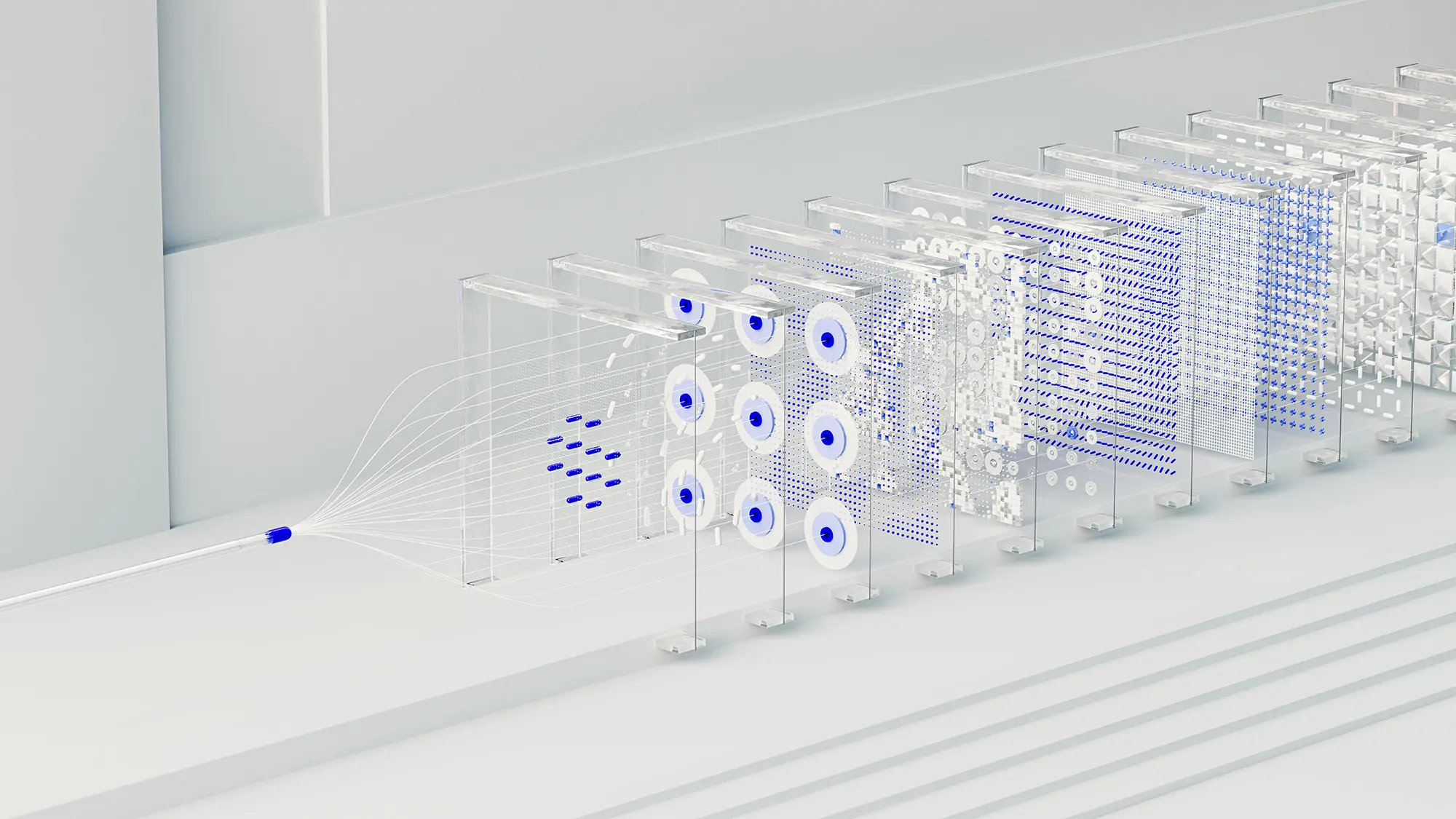

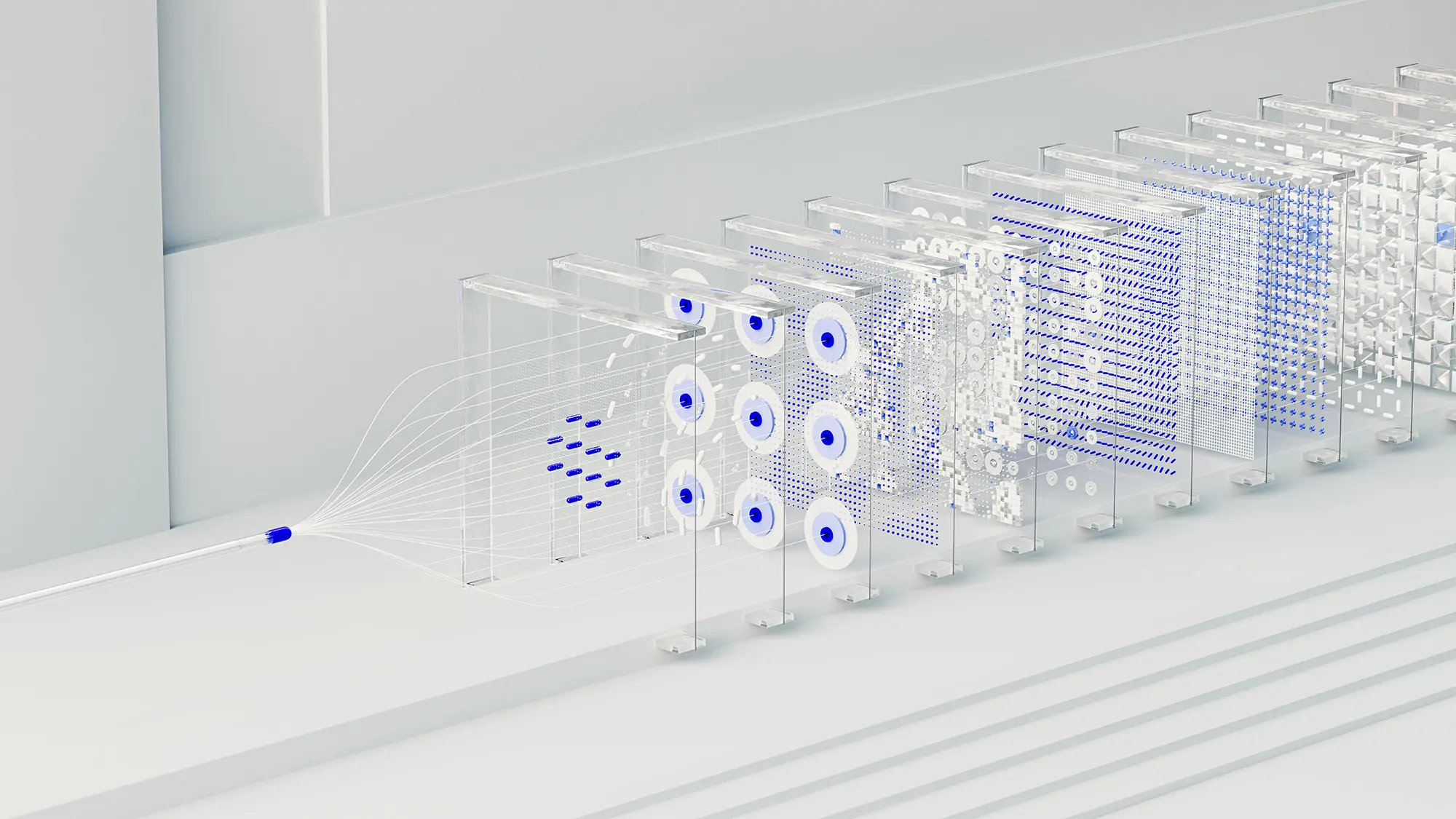

The Infra working group makes machine learning more reproducible and easier to manage for the broader community by building logging tools and recommending approaches for tracking and operating machine learning systems.

MLCommons working groups are collaborative groups of experts who define, develop, and conduct the MLPerf benchmarks and research projects.

The MLPerf Automotive working group defines and develops an industry-standard ML benchmark suite for automotive.

The MLPerf Client working group defines and develops an application that contains a set of fair and representative machine-learning benchmarks for client consumer systems.

The MLPerf Inference working group creates a set of fair and representative inference benchmarks.

The Mobile working group creates a set of fair and representative inference benchmarks for mobile consumer devices such as smartphones, tablets, and notebooks, designed to reflect the end-user experience.

The MLPerf Storage working group defines and develops the MLPerf Storage benchmarks to characterize performance of storage systems that support machine learning workloads.

The MLPerf Training working group defines, develops, and conducts the MLPerf Training benchmarks.

The MLPerf Tiny working group develops TinyML benchmarks to evaluate inference performance on ultra-low-power systems.

The Power working group creates power measurement techniques for various MLPerf benchmarks, enabling the reporting and comparison of energy consumption, performance, and power for benchmarks run on submission systems.

The Croissant working group develops a metadata format to standardize how ML datasets are described.

The Datasets working group creates new datasets to fuel innovation in machine learning.

The Medical working group develops benchmarks and best practices to help accelerate AI development in healthcare.

The MLCube working group aims to improve AI ease of use and to scale AI to more people.

The Algorithms working group creates a set of rigorous and relevant benchmarks to measure neural network training speedups due to algorithmic improvements.

The Chakra working group advances performance benchmarking and co-design using standardized execution traces.

The DMLR working group accelerates machine learning innovation and increases scientific rigor in machine learning by defining, developing, and operating benchmarks for datasets and data-centric algorithms, facilitated by a flexible ML benchmarking platform.

The Science working group evaluates, organizes, curates, and integrates artifacts around applications, models/algorithms, infrastructure, benchmarks, and datasets.