This week, members of the MLCommons® Power working group are presenting a paper at the 2025 IEEE International Symposium on High-Performance Computer Architecture (HPCA). The paper presents MLPerf® Power, a framework for benchmarking the energy efficiency of AI systems.

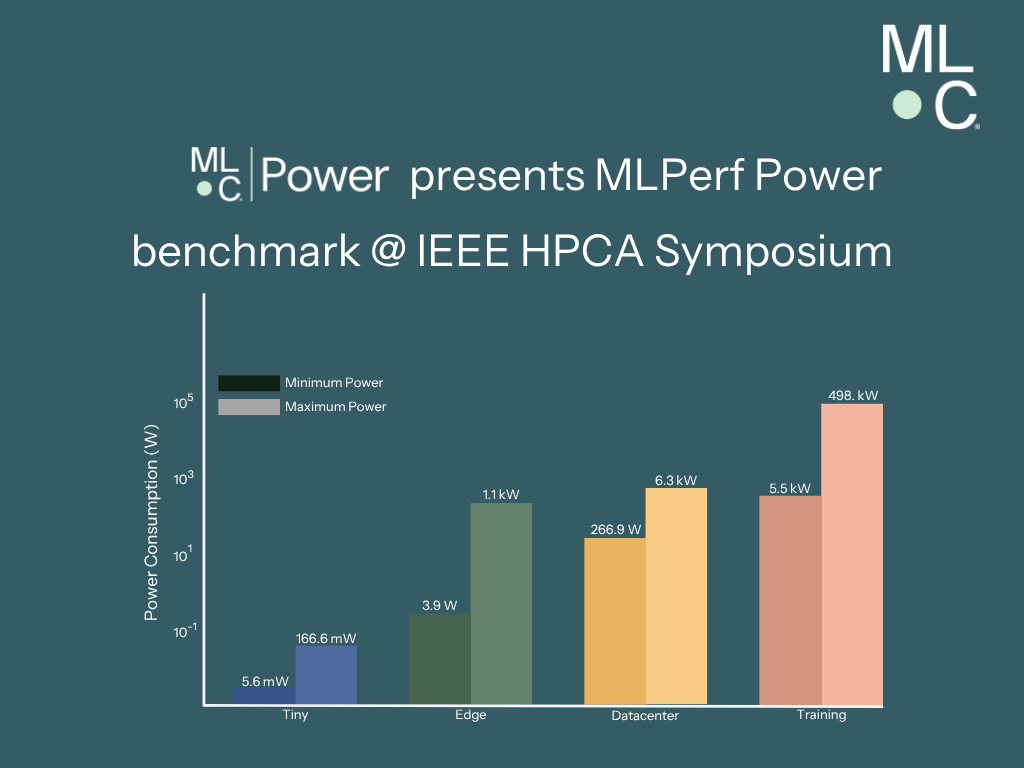

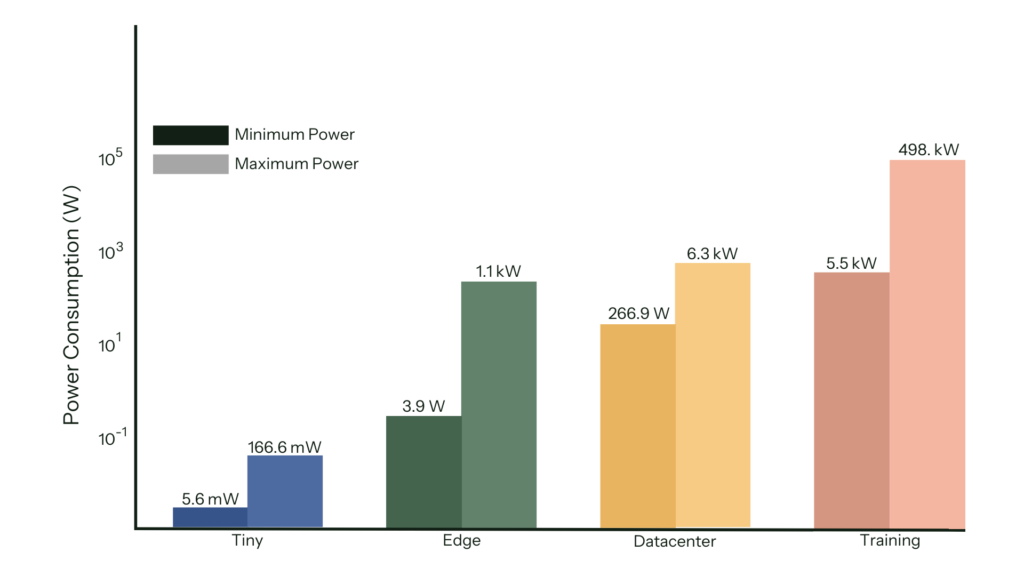

MLPerf Power is designed to measure energy efficiency across diverse applications and deployments of machine learning technologies, “from microwatts to megawatts.” That includes datacenters, edge deployments, mobile devices, and tiny “Internet of Things” implementations. It also includes a range of applications covering both training and inference.

“We cannot improve what we do not measure,” said Arun Tejusve (Tejus) Raghunath Rajan (Meta), Co-chair of the MLCommons Power working group. “Across the board, MLPerf has driven performance improvements for 6+ years. Our goal is to do the same for MLPerf Power. By providing a way to consistently measure and report power, we aim to move the needle of energy efficiency across the industry in a positive and sustainable manner.”

Fig. 1. Power consumption range across MLPerf divisions, highlighting the need for scalable power measurement.

Requirements for benchmarking energy efficiency

The MLPerf Power framework defines eleven requirements for benchmarking and measuring the energy efficiency of machine learning (ML) systems, grouped under three broad themes:

- Power measurements: ensuring that measurements are compatible with a wide range and scale of platforms and configurations, account for system-level interactions and shared resources, and, where feasible, capture physical power with an appropriate analyzer.

- Diverse ML benchmarking: defining consistent rules and reporting guidelines, using industry-standard workloads, and collecting data on power consumption and performance at different throughputs and ML model accuracy/quality targets.

- Evaluation, trends, and impact: communicating power consumption and energy efficiency trends and optimizations, providing case studies relevant to real-world scenarios, and being driven and audited by the industry.

The MLPerf Power framework addresses several myths and pitfalls related to measuring the power usage of AI systems. One is that measuring power consumption alone is sufficient. Instead, the benchmark focuses on measuring power efficiency: system performance per unit of power.

Another myth is that isolating and measuring just the ML components is sufficient. While they do make up a large portion of the power consumption, fully understanding AI system power efficiency requires measuring all the system components, including compute, memory/storage, the interconnect, and the cooling system. This requires a careful, comprehensive approach since different components vary their level of activity at different phases of an AI workload.
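As a rough illustration of both points, the sketch below computes efficiency as performance per unit of power, once using accelerator power alone and once using full-system power. All component names and numbers are hypothetical and chosen only for the arithmetic; they are not MLPerf Power results, and the helper `samples_per_joule` is not part of any MLPerf tooling.

```python
def samples_per_joule(throughput_samples_per_s: float, avg_power_watts: float) -> float:
    """Performance per unit of power: (samples/s) / W = samples/J."""
    if avg_power_watts <= 0:
        raise ValueError("average power must be positive")
    return throughput_samples_per_s / avg_power_watts


# Hypothetical average power draw per component, in watts (illustrative only).
component_power_watts = {
    "accelerators": 2800.0,
    "host_cpu_memory_storage": 600.0,
    "interconnect": 200.0,
    "cooling": 400.0,
}

# Hypothetical sustained throughput, in samples per second.
throughput = 20000.0

# Full-system power is the sum over every component, not just the accelerators.
system_power_watts = sum(component_power_watts.values())

accel_only = samples_per_joule(throughput, component_power_watts["accelerators"])
full_system = samples_per_joule(throughput, system_power_watts)

print(f"accelerators only: {accel_only:.2f} samples/J")
print(f"full system:       {full_system:.2f} samples/J")
```

With these made-up figures, counting only the accelerators overstates efficiency, because the remaining components draw power while contributing to the same throughput.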

MLPerf Power benchmark submissions provide early and impactful insights

MLCommons has received 1,841 MLPerf Power benchmark submissions to date, spanning several years and four workload versions. The benchmark results are already providing useful insights that inform the design of future AI systems.

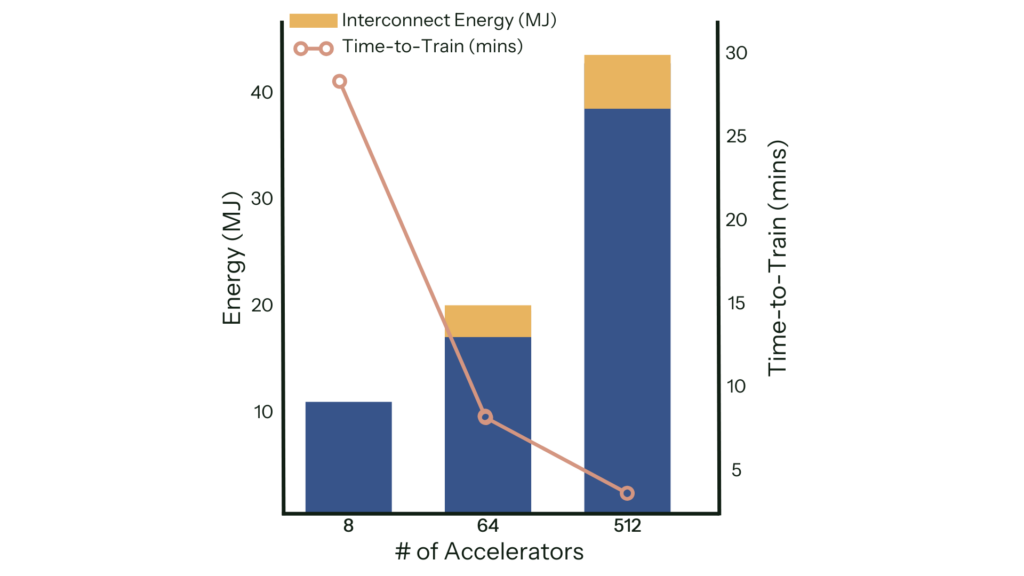

One important insight relates to scaling up training systems and the complex relationship between system scale, training time, and energy consumption. Specifically, scaling up the number of accelerators reduces absolute training time, but the amount of energy consumed increases at a non-linear rate. This is due to two factors: the increased communication requirements between a larger set of accelerators, and the decreased utilization of each accelerator. Designing AI systems therefore requires understanding these tradeoffs in order to strike the necessary balance between scale, speed, and energy consumption.

“In the AI era, where scaling laws are driving computational demands, energy efficiency is a fundamental imperative for sustainable and responsible technological advancement,” noted Sachin Idgunji (NVIDIA), founding Co-Chair of the MLCommons Power working group. “Benchmarks such as MLPerf Power give AI system builders the information they need to help them achieve their design goals.”

Fig. 2. Energy consumption and time-to-train for Llama2-70b LoRA fine-tuning across different numbers of accelerators.
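The tradeoff between scale, training time, and energy can be sketched with simple arithmetic: energy is average power multiplied by time, so a larger system that trains faster can still consume more energy overall. The runs below are hypothetical placeholders, not the data behind the figure, and the `Run` class and `scaling_report` function are illustrative names rather than MLPerf tooling.

```python
from dataclasses import dataclass

JOULES_PER_KWH = 3.6e6


@dataclass(frozen=True)
class Run:
    accelerators: int
    time_to_train_s: float      # wall-clock time to reach the quality target
    avg_system_power_w: float   # average full-system power during the run

    @property
    def energy_kwh(self) -> float:
        # Energy = average power * time, converted from joules to kWh.
        return self.avg_system_power_w * self.time_to_train_s / JOULES_PER_KWH


def scaling_report(runs: list[Run]) -> list[tuple[int, float, float]]:
    """Return (accelerators, speedup, energy ratio) relative to the smallest run."""
    base = min(runs, key=lambda r: r.accelerators)
    return [
        (r.accelerators,
         base.time_to_train_s / r.time_to_train_s,
         r.energy_kwh / base.energy_kwh)
        for r in sorted(runs, key=lambda r: r.accelerators)
    ]


# Hypothetical runs (illustrative only; not measured results).
runs = [
    Run(accelerators=8, time_to_train_s=3600.0, avg_system_power_w=6000.0),
    Run(accelerators=64, time_to_train_s=600.0, avg_system_power_w=48000.0),
    Run(accelerators=512, time_to_train_s=150.0, avg_system_power_w=384000.0),
]

for n, speedup, energy_ratio in scaling_report(runs):
    print(f"{n:4d} accelerators: {speedup:5.1f}x faster, {energy_ratio:4.2f}x energy")
```

In this made-up example, the speedup falls short of the increase in accelerator count (6x and 24x for 8x and 64x the accelerators), so total energy rises even as time-to-train drops.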

Another insight derived from the MLPerf Power results involves the trade-off between AI model accuracy and energy efficiency. In earlier versions of the benchmark, results showed that organizations were sacrificing energy efficiency – by up to 50% – to increase inference accuracy from 99% to 99.9%. We believe that these benchmark results compelled organizations to deploy significant optimization techniques. In more recent benchmark versions, we now see that reduced-precision techniques such as quantization have significantly narrowed the gap in energy efficiency between inference systems running at 99% and 99.9% accuracy. Moving forward, we expect these techniques to remain a driving force in designing energy-efficient AI systems.

The Path to Environmentally Sustainable AI Systems

Establishing an industry-wide standard for ML system power measurement is a critical step toward creating a set of metrics for what qualifies as “environmentally sustainable AI.”

“That’s our north star,” said Vijay Reddi, Professor at Harvard University and Vice President of MLCommons. “Our mission is to enable building sustainable AI systems, but to do so we need to define metrics that we can use to quantify energy efficiency – and ultimately sustainability. MLCommons is an engineering organization; we build things. We’ve built the framework, the metrics, the benchmark, and the benchmarking process to get us the data we need to understand, quantify, and ultimately build sustainable AI systems, and we’re continuing to iterate and refine it to keep pace with technology.”

The HPCA paper makes several recommendations, including developing tools that can estimate the carbon footprint of an AI system. Doing so requires measuring the system’s energy efficiency, but it must also be contextualized in several other factors, including the nature of the workload as well as the carbon emissions of the system’s power source.
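A minimal sketch of such an estimate, assuming the simplest possible model: emissions equal the energy consumed multiplied by the carbon intensity of the power source. The function name and the intensity values are hypothetical, and a realistic tool would also need to account for the workload and other factors noted above.

```python
def estimate_emissions_kg(energy_kwh: float, carbon_intensity_g_per_kwh: float) -> float:
    """Estimate emissions in kg CO2e as energy (kWh) * intensity (g CO2e/kWh) / 1000."""
    if energy_kwh < 0 or carbon_intensity_g_per_kwh < 0:
        raise ValueError("energy and carbon intensity must be non-negative")
    return energy_kwh * carbon_intensity_g_per_kwh / 1000.0


# Hypothetical workload energy and grid intensities (illustrative only).
workload_energy_kwh = 16.0
intensity_by_source = {
    "higher-carbon grid (assumed)": 400.0,  # g CO2e per kWh
    "lower-carbon grid (assumed)": 50.0,    # g CO2e per kWh
}

for source, intensity in intensity_by_source.items():
    kg = estimate_emissions_kg(workload_energy_kwh, intensity)
    print(f"{source}: {kg:.1f} kg CO2e")
```

Under this simplified model, the same measured energy yields very different footprints depending on the power source, which is why the energy measurement alone is not enough.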

In the short term, the team’s most critical need is for more submissions to the MLPerf Power benchmark. “The roughly 1,800 submissions we have now is enough to deliver a first set of qualitative insights,” said Arya Tschand, a PhD student at Harvard University who led the work, “but if we can increase that by an order of magnitude we can extrapolate the data further, identify and quantify additional trends, and make more specific and actionable design recommendations that are grounded in hard data. That benefits both the industry and the environment: higher energy efficiency saves AI companies money by reducing their substantial power bills, and it also reduces the demand for both existing and new power generation.”

Visit the MLPerf Power working group page to join the working group and learn more, including how to submit to the MLPerf Power benchmark.

About MLCommons

MLCommons is the world’s leader in AI benchmarking. An open engineering consortium supported by over 125 members and affiliates, MLCommons has a proven record of bringing together academia, industry, and civil society to measure and improve AI. The foundation for MLCommons began with the MLPerf benchmarks in 2018, which rapidly scaled as a set of industry metrics to measure machine learning performance and promote transparency of machine learning techniques. Since then, MLCommons has continued using collective engineering to build the benchmarks and metrics required for better AI – ultimately helping to evaluate and improve AI technologies’ accuracy, safety, speed, and efficiency.