Some of the most exciting applications of AI currently being developed are within the medical domain, including building models to analyze and label X-rays, MRIs, and CT scans. As digital radiography has become ubiquitous, the amount of imaging data generated has exploded. This is fueling the development of AI tools to help experts improve the accuracy of analysis of health-related issues, and to reduce the time it takes to complete it. At the same time, global regulations to protect consumers’ health data have been imposed, making it more challenging for AI developers to gain access to enough aggregated data to effectively build generalizable AI models, as well as to evaluate their quality and performance.

The MLCommons® Medical working group has been developing best practices and open tools for the development and evaluation of such AI applications in healthcare, while conforming to health data protection regulations. In particular, it has used an approach that encompasses both federated learning and federated evaluation.

Federated learning and federated evaluation in healthcare

Federated learning is a machine learning approach that enables increased data access while reducing privacy concerns. With federated learning, rather than attempting to aggregate training data across multiple contributing organizations into a single location as a unified dataset, it allows the data to remain physically distributed across the organizations’ sites and under their control. Instead of bringing the data to the model, the model is brought to the data, and is trained in installments using the data available at each location. The result is the same as if all the data were trained in one location, but with federated learning, none of the raw data is ever shared, keeping the system compliant with data protection regulations.

Successful clinical translation requires further evaluation of the AI model’s performance. Borrowing from federated learning, federated evaluation is an approach to evaluate AI models on diverse datasets.. With federated evaluation the test data is physically distributed across contributing organizations and a fully-trained model, called a “system under test” (SUT), is passed from site to site. Evaluation results are then aggregated and shared across all participating organizations.

A medical research team wishing to do a complete medical study using AI will need to do both: federated learning to train an AI model, and federated evaluation to assess the results.

Using federated evaluation to benchmark medical AI models

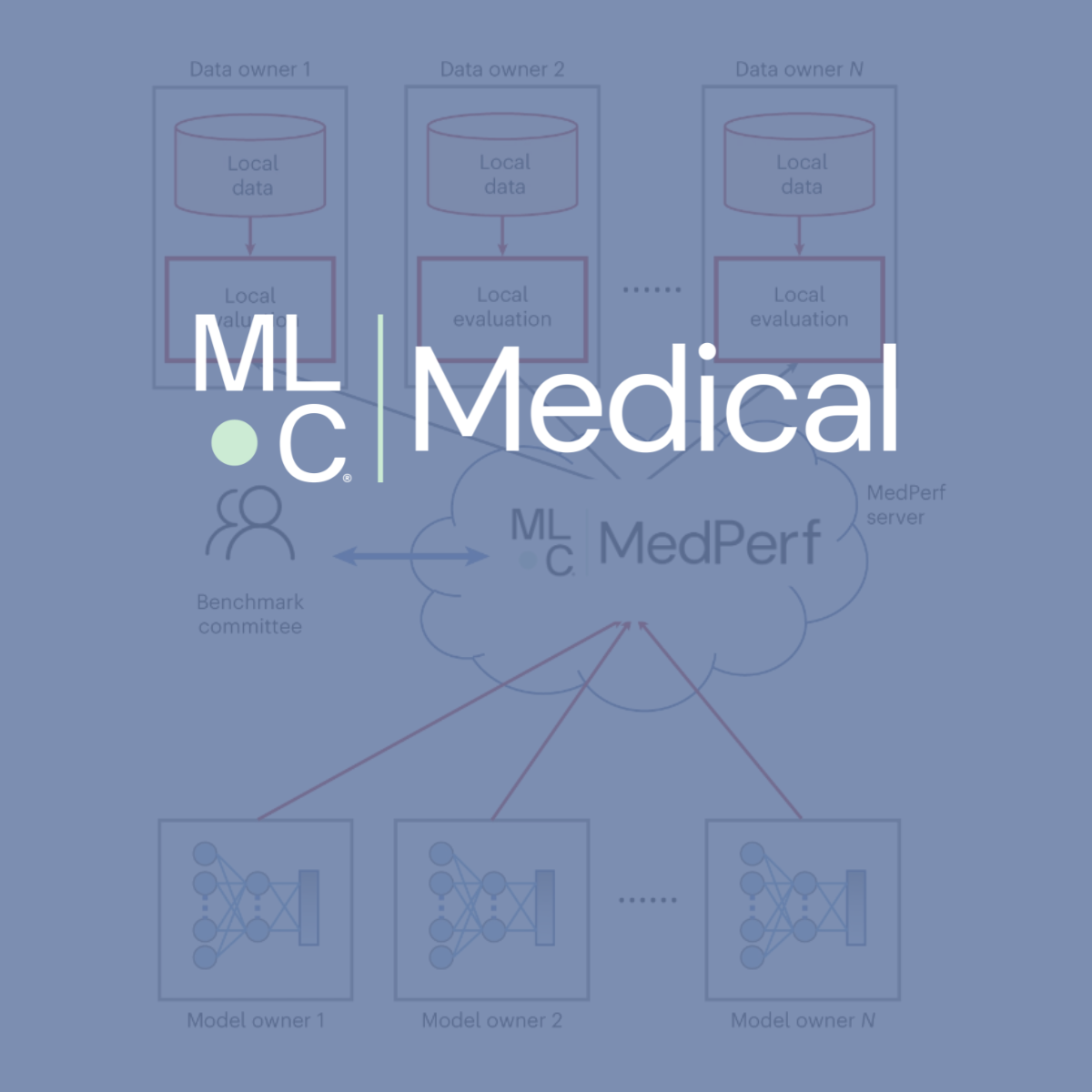

Federated evaluation is a core part of MLCommons’ MedPerf approach to benchmarking AI models for medical applications. MedPerf is an open benchmarking platform, which provides infrastructure, technical support and organizational coordination for developing and managing benchmarks of AI models across multiple data sources. It improves medical AI by enabling benchmarking on diverse data from across the world to identify and reduce bias, offering the potential to improve generalizability and clinical impact.

Through MedPerf, a SUT can be packaged up in a portable, platform-independent container called MLCube® and distributed to each participating data owner’s site where it can be executed and evaluated against local data. MLCubes are also used to distribute and execute a module that properly prepares test data according to the benchmark specifications, and finally an evaluation module manages the evaluation process itself. With MedPerf’s orchestration capabilities,researchers can evaluate multiple AI models through the same collaborators in hours instead of months, making the evaluation of AI models more efficient and less error-prone.

Federated evaluation on MedPerf. Machine learning models are distributed to data owners for local evaluation on their premises without the need or requirement to move their data to a central location. MedPerf relies on the benchmark committee to define the requirements and assets of federated evaluation for a particular use case (https://www.nature.com/articles/s42256-023-00652-2)

“It turns out that data preparation is a major bottleneck when it comes to training or evaluating AI. This issue is further amplified in federated settings where quality and consistency between contributing datasets is harder to assure due to the decentralized setup,” said Alex Karagyris, MLCommons Medical working group co-chair. To address this the MLCommons’ Medical working group focused on developing best practices and tools to alleviate much of the prep burden by enabling data sanity checking and offering pre-annotation through pre-trained models during data preparation. This is tremendously important when running world clinical studies such as the FeTS one.

Partnering with RANO

The MLCommons Medical Working Group, Indiana University, and Duke University have partnered with the Response Assessment in Neuro-Oncology (RANO) cooperative group to pursue a large-scale study demonstrating the clinical impact of AI in assessing postoperative or post-treatment outcomes for patients diagnosed with glioblastoma, rare brain tumors. The second global Federated Tumor Segmentation Study (FeTS 2.0) is an ambitious project involving 50+ participating institutions. It is investigating important questions that hopefully will become a role model for running continuous AI-based global studies. For the MLCommons Medical working group, the FeTS 2.0 project is an opportunity to gain frontline experience with federated evaluation in a real-world medical study.

World map with institutions participating in the clinical study that have completed dataset registration on MedPerf. As of August 2024 fifty-one (51) institutions have joined. Map generated using Plotly Express and Google’s Places API.

For the FeTS 2.0 study, MLCommons is contributing its open-source tools MedPerf and GaNDLF (generally nuanced deep learning framework), along with engineering resources and technical expertise, through its contributing technical partners. MedPerf enables orchestration of AI experiments on federated datasets and GaNDLF delivers scalable end-to-end clinical workflows. Combined they make the mechanism of AI model development, training, and inference more stable, reproducible, interpretable, and scalable, without requiring an extensive technical background.

Since the FeTS 2.0 project requires both federated learning to train AI models and federated evaluation for testing, MedPerf has been extended to integrate with OpenFL, an open source framework for secure federated AI developed by Intel. OpenFL is an easy to use python-based library that enables organizations to train and validate machine learning models on private data without sharing that data with a central server. Data owners retain custody of their data at all times.

Dr. Evan Calabrese, Principal Investigator for the FeTS 2.0 study and Assistant Professor of Radiology at Duke University said, ”global community efforts, like the FeTS study, are essential for pushing the boundaries of clinically relevant AI. Moreover, getting the support and expertise of open community organizations, such as MLCommons, can tremendously improve the outcome and impact of global studies such as this one”.

“The partnership with RANO on FeTS 2.0 has been strong and mutually beneficial,” said Karagyris. “It’s enabled us to ensure that we are on the right path as we continue to develop MedPerf to become a standard for benchmarking AI systems in the medical domain. It has also given us key insights as to where we can add even more value in the future by solving hard problems.” One such challenge is ensuring the quality and consistency of annotations created by human experts; for example, in the case of the FeTS 2.0 project, the marking and segmentation of tumors on an image. Because the image data is held separately at several institutions and can’t be shared, each participating institution has typically tasked its own expert to annotate its portion of the data. However, having people of different experience levels across organizations invites inconsistencies and different levels of quality. The group is exploring multiple alternatives to alleviate this. From deploying pretrained models to assess annotations, to measuring the expertise level, all the way to enabling a pool of experts in a central location to annotate data remotely across institutions, improving both quality and consistency.

Looking forward

“Our ambition extends well beyond the FeTS 2.0 study; through MedPerf we are building open-source benchmarks and solutions to broadly enable evaluation of AI models across healthcare,” said Karagyris. “Everyone in the field benefits from independent, objective benchmarks of AI’s application to medicine that let us measure how much and how quickly the systems are getting better, faster, and more powerful. The path to advancing the state of the art in medical AI is by opening avenues for institutions to collaborate and contribute data to medical studies as well as to collective efforts such as MLCommons benchmarking, while ensuring that they remain compliant with regulations protecting health data.”

Read the Full FeTS study report

In the spirit of transparency and openness, the group created a full report that describes current progress, technical details, challenges, and opportunities. We believe that sharing our experiences with the broad community can help facilitate future improvements in current practices.

We aim to share more updates with the community as the study progresses.

Join Us

MLCommons welcomes additional community participation and feedback on its ongoing work. You can join the AI Medical working group here.