As increasingly complex large language models (LLMs) emerge, the need for benchmarks that accurately reflect real-world applications becomes more pressing. MLPerf aims to select representative models widely used by customers in the industry, ensuring that the benchmarks are both realistic and relevant. By doing so, MLPerf provides valuable insights that help consumers make informed decisions about application deployment and budgeting. Additionally, these benchmarks enable vendors to optimize workloads effectively, catering to the practical needs of their customers while navigating the evolving AI technology landscape.

Building on LLM benchmarks already in MLPerf Inference suite

The MLPerf Inference suite already contains GPT-J and Llama 2 70B large language models, which were added in the v3.1 and v4.0 rounds, respectively. We set out to expand on the existing LLM benchmarks in the MLPerf Inference v4.1 suite by incorporating a mixture of experts (MoE) model.

The MoE architecture is behind some of the most popular and powerful large language models (LLMs) in the field, making it an ideal candidate for inclusion in our benchmark suite. MoE models are particularly appealing due to their ability to manage computational resources efficiently while maintaining high performance. By adding an MoE model, we can provide a more comprehensive evaluation of LLM capabilities that reflects the latest advancements in AI technology.

For readers interested in learning more about mixture of experts, please refer to the detailed explanation available in this article: Mixture of Experts Explained (huggingface.co).

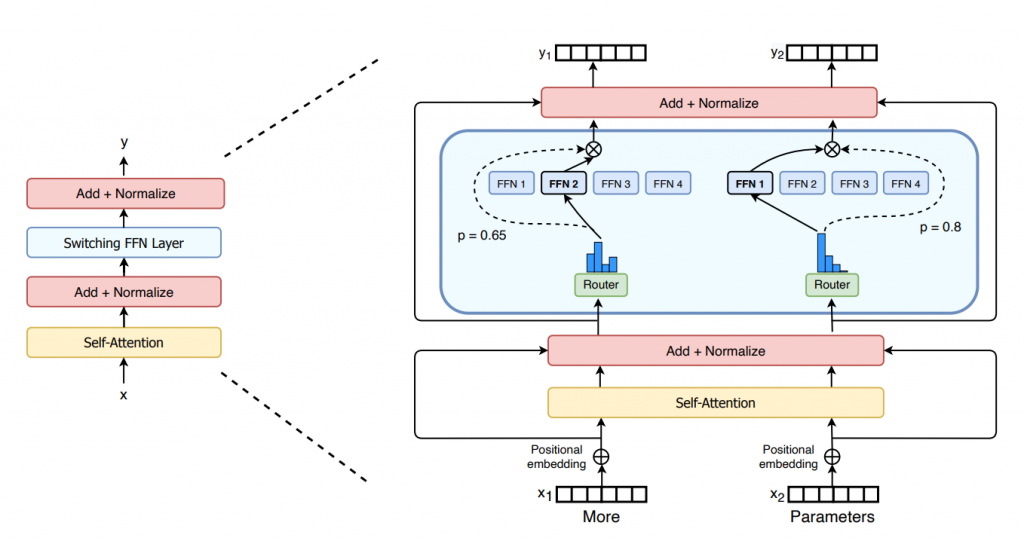

Illustration of the sparse Switch FFN layer in the mixture of experts network architecture (from the Switch Transformers paper).

The multi-faceted ability of the MoE model requires sophisticated accuracy and performance testing that requires going beyond the single-task evaluation that has been the norm for MLPerf Inference benchmarks to date.

To take on these challenges, the MLPerf Inference working group created a technical task force to study the workload and agree on a representative benchmark. The task force goals were:

- Understand how MoE models are used in the industry

- Survey MoE models and identify those that can be used for MLPerf MoE benchmark

- Choose an MoE model for the benchmark

- Identify tasks and datasets for the benchmark

- Create and test the reference implementation

Below, we describe how and why the task force evaluated the choices that led to the creation of the new MoE benchmark.

Model selection

In choosing models for the MLPerf Inference benchmark, we considered several mixture of experts (MoE) models, including DBRX, Grok, Mixtral 8x7B, and Mixtral 8x22B. Ultimately, Mixtral 8x7B was selected due to its widespread deployment and strong problem-solving capabilities across various domains. Mixtral 8x7B has gained popularity for its robust performance in handling diverse tasks, making it a good candidate for evaluating reasoning abilities. Its versatility in solving different types of problems provides a reliable basis for assessing the model’s effectiveness and enables the creation of a benchmark that is both relevant and comprehensive. In addition, Mixtral 8x7B’s size strikes a solid balance between performance and accuracy; it also allows for implementations across a wide range of processors.

Dataset and task selection

Using a single task for evaluation would not be sufficient to capture the full spectrum of the capabilities of a MoE model. Therefore, we thoroughly examined available literature to identify multiple tasks to include in the benchmark that would be both challenging and representative of real-world applications. Three were selected – Q&A, math reasoning, and coding – and we explain the rationale and selection processes in the following sections. The dataset for this combination of tasks is composed of 5,000 samples for each task, making the final dataset 15,000 samples.

Q&A task

For the Q&A benchmark dataset, we continue to use the Open Orca dataset, which aligns with the approach taken by our Llama 2 70B benchmark. Open Orca is recognized for its high quality and extensive coverage, making it an excellent choice for evaluating the question-answering capabilities of LLMs. This dataset combines augmented data from the FLAN Collection with a million GPT-4 completions, providing a rich resource for assessing natural language processing abilities.

We randomly sampled 5000 samples after filtering the original dataset following the curation methodology. We used the ROUGE metrics for accuracy measurement, following in the footsteps of MLPerf Inference v4.0.

For more details, please refer to Llama 2 70B: An MLPerf Inference Benchmark for Large Language Models – MLCommons.

| Question:Leo: If “A woman sitting on some steps smokes a cigarette while accessing her laptop.” does that mean that “A person sitting on some steps smokes a cigarette while accessing her laptop.”?Options:- yes- it is not possible to tell- no Answer:Yes, the sentence “A woman sitting on some steps smokes a cigarette while accessing her laptop” can be changed to “A person sitting on some steps smokes a cigarette while accessing her laptop.” The gender of the person has been changed from specifically a woman to a person in general. However, it is important to note that the pronoun “her” is still used, which indicates that the person referred to is female. If you want to make the sentence completely gender-neutral, you could change “her laptop” to “the laptop” or “a laptop.” |

A sample question from the open_orca dataset (GPT4 partition), and the response of Mixtral 8x7B.

Math reasoning task

We selected GSM8K (Grade School Math 8K) as the math reasoning dataset to evaluate the reasoning capabilities of LLMs like Mixtral 8x7B. GSM8K consists of 8,792 high-quality, linguistically diverse, grade school math word problems that require multi-step reasoning to solve. These problems are designed to be solvable by a bright middle school student and primarily involve performing a sequence of elementary calculations using basic arithmetic operations. The dataset’s importance lies in its ability to assess an LLMs capacity for complex problem-solving, as the questions typically require two to eight steps to reach the final answer. We randomly sampled 5,000 questions from the dataset.

In setting up the benchmarks, we utilize exact match (EM) as the evaluation score, which measures the model’s ability to produce the precise correct answer, reflecting its accuracy in reasoning and computation. Additionally, a 5-shot approach is employed, where the model is provided with five examples before attempting to solve the problems. This method helps gauge the model’s ability to learn from limited examples and apply that learning to new, similar tasks, further highlighting its reasoning prowess.

| Question:The square footage of the two bedrooms in the apartment that Jenny and Martha share totals 300 square feet. If Jenny’s bedroom is 60 square feet larger than Martha’s, how large, in square feet, is Martha’s bedroom? Answer:Let’s denote the size of Martha’s bedroom as M. Jenny’s bedroom is then M + 60 square feet. The total size of the two bedrooms is 300 square feet. So we have the equation M + (M + 60) = 300. Solving this equation for M, we get 2M + 60 = 300, which simplifies to 2M = 240, so M = 120. The answer is 120 square feet. |

A sample question from the GSM8K, and the response of 5-shot Mixtral 8x7B.

Coding task

For the code generation task, the task force chose the MBXP (Mostly Basic X [Programming language] Problems) dataset created by the AWS AI Labs team. We came to this decision after evaluating many popular code generation benchmarks such as MBPP (Mostly Basic Python Problems), HumanEval, Multi-HumanEval, CoNaLa, and CodeAlpaca. We chose MBXP because of its wide range of languages and longer input prompts.

MBXP extends the popular MBPP dataset by including 12 other languages: C++, Javascript, Typescript, Kotlin, Scala, Php, Ruby, C#, Go, Swift, and Perl. The same core problems in MBPP are included, but they are extended with the function signature in the language of choice, which hints at which language the model should use.

We made several modifications to the dataset when adapting it for our use. The first was to apply the Mistral Prompt Format to the prompts present in the dataset to allow the model to better understand the task it was being presented with. Furthermore, we appended the following footer to the prompt “Here’s the completed code:nn“`$LANGUAGE” after discovering that Mixtral 8x7B’s code generation performance sharply decreases when generating code outside of markdown blocks.

In many code generation benchmarks, the metric used to evaluate performance is pass@k, which allows the language model k to attempt to generate code that successfully passes a set of tests. The MBXP benchmark utilizes this metric by providing a comprehensive series of tests for each problem in each language, all conveniently organized within the mxeval library. For each problem in the dataset, mxeval integrates the generated code with the corresponding test suite into a single file and executes it. A successful run, indicated by an exit code of 0, is marked as successful by mxeval. Conversely, if errors occur—such as test failures or issues with code compilation or interpretation—the run is marked as a failure. In our evaluation, we have opted to use pass@1 to minimize unnecessarily long inference times, ensuring a more efficient assessment process.

For the split of the final dataset, we selected a subset of 5000 samples. Instead of sampling equally from every language, we first decided to restrict our search to the languages in which Mixtral 8x7B is most fluent. The reasoning behind this was that the accuracy metric aims to highlight accuracy regressions, which are well hidden in languages where accuracy is already poor. Furthermore, JVM-based languages were omitted as evaluating them led to long evaluation times. These restrictions lead us to sample problems from C++, Javascript, Typescript, PHP, Python, and Ruby, providing us with a mix of syntaxes (C-style versus more exotic syntaxes) and a mix of compiled and interpreted languages.

| Problem:<s> [INST] Complete the following code. Be concise, don’t output anything that isn’t necessary.#include <bits/stdc++.h>using namespace std; /** * Write a function to combine two given sorted lists using heapq module. * > combineLists(vector<int>{1, 3, 5, 7, 9, 11}, vector<int>{0, 2, 4, 6, 8, 10}) * {0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11} * > combineLists(vector<int>{1, 3, 5, 6, 8, 9}, vector<int>{2, 5, 7, 11}) * {1, 2, 3, 5, 5, 6, 7, 8, 9, 11} * > combineLists(vector<int>{1, 3, 7}, vector<int>{2, 4, 6}) * {1, 2, 3, 4, 6, 7} */vector<int> combineLists(vector<int> num1, vector<int> num2) { [/INST]Here’s the completed code: “`cpp Solution: #include <bits/stdc++.h>using namespace std; vector<int> combineLists(vector<int> num1, vector<int> num2) { vector<int> result; merge(num1.begin(), num1.end(), num2.begin(), num2.end(), back_inserter(result)); return result;}“` |

A sample problem from MBXP, and the response of Mixtral 8x7B. The green text is inserted via preprocessing.

Performance metrics

We used the same performance metrics as those used in the Llama 2 70B benchmark: token throughput for the offline scenario, and TTFT+TPOT as the server scenario. For more information, please refer to the “Measuring performance of the LLM” section of Llama 2 70B: An MLPerf Inference Benchmark for Large Language Models – MLCommons.

Reference Implementation

For readers interested in running the MoE model themselves, we encourage them to follow the reference implementation, which contains code and instructions on how to run the entire benchmark end-to-end. This can be found in the following GitHub link.

Conclusion

In the MLPerf Inference v4.1 round, there were eight Mixtral 8x7B submissions with 34 results! We hope to see more submissions in subsequent rounds as this model becomes more popular with users and as more hardware and software options optimized for the model come to market.